June 5, 2024

By Jeremy A. Sykes

Three guys walk into a bar wearing three different hats. The bartender looks around at the men and says, "What is this, a hat trick?"

If that joke surprised you in any way or wasn’t what you were expecting when you clicked this link, then you’ve just experienced something psychologists call belief-inconsistent surprise. You probably assumed this article would start differently, perhaps more like “A team of brain researchers at the University of Chicago led by Monica Rosenberg has…” That is, you had a belief, or an expectation, based on evidence and prior learning of what you’d find when you started to read this page. But Rosenberg’s lab has demonstrated that you can literally measure surprise with current brain scanning technology.

If you know anything about the brain, it probably relates to old horror tropes about directly stimulating parts of the brain to initiate movement in the subject. Simple, gross motor skills that might make you move your finger, or make you flinch. You’ve probably heard about chemicals, like dopamine or serotonin, that can make you feel pleasure or happiness. Unsurprisingly, neuroscience has moved forward considerably, and researchers are delving into much more complicated feelings, thoughts, and processes.

A team of brain researchers at the University of Chicago led by graduate student Ziwei Zhang and assistant professor Monica Rosenberg has created a machine learning model that predicts how surprised individuals and small groups are by various kinds of stimuli, and it works across two entirely different fMRI datasets. The University of Chicago’s Research Computing Center (RCC) provided the computational muscle for Rosenberg’s Cognition, Attention, and Brain Lab to delve into the mysteries of why we pay attention, stay attentive, and learn new things. But before we can predict surprise, we must learn how to measure it. And once we’ve measured it, model it.

The 'Oh Rho!' Factor

To measure surprise, researchers must first define it, then induce it in test subjects in a controlled environment. But watching faces for the tell-tale signs of surprise can be unreliable, as pupil size and facial expressions often differ between subjects and situations. A much more consistent approach is to use fMRI. Using a Spearman correlation (denoted by the Greek letter rho), the fMRI results were compared against estimates of how surprised a person should be at different times during the brain scan, that is, a belief-inconsistent surprise time course. Belief-inconsistent surprise must not only contradict a person’s expectations about the environment; it must do so strongly enough that change or learning can occur.
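To make the Spearman correlation concrete, here is a minimal sketch in plain Python. The function names and the numbers are made up for illustration and are not taken from the study. Spearman's rho is simply the ordinary (Pearson) correlation computed on ranks, so it rewards any consistently increasing relationship, not just a straight-line one.

```python
from statistics import mean

def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks

def pearson(x, y):
    """Ordinary linear correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical values: a brain-derived measure and a surprise estimate
# at six time points. The relationship is increasing but not linear.
brain_measure = [0.1, 0.4, 0.35, 0.8, 0.9, 0.2]
surprise = [1.0, 3.0, 2.0, 9.0, 30.0, 1.5]
rho = spearman_rho(brain_measure, surprise)
```

Because the two made-up series rise and fall in exactly the same order, their ranks match and rho comes out at 1, even though the surprise values jump unevenly.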

People are sensitive to surprise signals, and if your prediction is not in line with reality you are more motivated to learn and process new information to add to your understanding of the current environment, said Rosenberg.

Zhang and Rosenberg began with two publicly available datasets featuring thousands of brain scan images from dozens of individuals, taken by two different sets of researchers. The team was able to corroborate their model on both datasets–an unusual feat–lending even more gravitas to the team’s results.

While such massive datasets may have been an impediment to prior generations of scientists, they are more accessible now to researchers with access to High Performance Computing (HPC) resources, such as the RCC’s Midway machine. Massive datasets are also a massive pain in the neck to transport. RCC’s team works frequently with the labs to help transfer “Big Data” from national datahubs like the NIH. In this case, however, the images themselves were weighty and the Midway HPC cluster took the images and compared them sequentially to one another, mapping the distance between notable points in each image and turning them into statistical data.

The RCC was able to assist the team in multiple ways up front. Storing the data and mounting the machines to the team’s computers meant that the statistical analyses could go much more quickly. The main software, AFNI (which stands for Analysis of Functional NeuroImages), is Unix-based software, ideal for communicating with big computers like Midway. The RCC and Zhang worked together to get the software running and linked to the datasets. The lab had developed a “pipeline, automating this process by creating and submitting jobs that call AFNI, and execute those steps in parallel, so that all participants’ data are preprocessed at the same time,” said Zhang. If the team had only been able to run one participant at a time, there would have been a bottleneck. Likewise, when it was time to begin the statistical analyses, RCC’s Midway was able to run each calculation thousands of times, an analysis just not possible on lab computers.
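The idea of preprocessing every participant at once can be sketched in a few lines of Python. This is only an illustration: the lab’s pipeline submitted cluster jobs that call AFNI, whereas this sketch uses local threads and a placeholder `preprocess` function, and the participant labels are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def preprocess(participant_id):
    """Placeholder step: a real pipeline would launch preprocessing software here."""
    return f"{participant_id}: preprocessed"

def run_all(participant_ids, max_workers=4):
    """Run the preprocessing step for all participants concurrently."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in input order even though work overlaps in time.
        return list(pool.map(preprocess, participant_ids))

# Hypothetical participant labels.
participants = [f"sub-{n:02d}" for n in range(1, 6)]
results = run_all(participants)
```

The point is the shape of the work: each participant is independent of the others, so nothing forces them to wait in a single line.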

In both brains and computers, the points of interest, particular groups of neurons for example, are often called nodes. Network neuroscientists call the relationship between a pair of nodes an “edge.” The data, once created and assembled, were run through a machine learning algorithm the team developed called the Edge-Fluctuation-Based Predictive Model.
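The article does not spell out how an edge is turned into numbers, so the following is a sketch under an assumption: one approach used in network neuroscience is to standardize each node’s signal and multiply the two signals together moment by moment. The function names and sample values are hypothetical.

```python
from statistics import mean, pstdev

def zscore(series):
    """Standardize a time series to mean 0 and (population) standard deviation 1."""
    m, s = mean(series), pstdev(series)
    return [(v - m) / s for v in series]

def edge_time_series(node_a, node_b):
    """Moment-by-moment co-fluctuation of two nodes.

    Assumption for illustration: the edge value at each time point is the
    product of the two z-scored node signals at that time point.
    """
    za, zb = zscore(node_a), zscore(node_b)
    return [a * b for a, b in zip(za, zb)]

# Hypothetical signals from two nodes that rise and fall together.
node_a = [1.0, 2.0, 3.0, 4.0]
node_b = [2.0, 4.0, 6.0, 8.0]
edge = edge_time_series(node_a, node_b)
average_edge = mean(edge)
```

A useful property of this definition is that the average of the edge time series equals the ordinary correlation between the two nodes, so the time series can be read as that correlation unfolded over time.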

Graduate student Ziwei Zhang, and primary investigator Dr. Monica Rosenberg, photos from Cognition, Attention & Brain Lab

Getting an Edge through Gaming

Though space is limited in an fMRI, certain tasks can still be performed, particularly if the participant’s head remains still. Imagine putting your head in a giant roll of toilet paper and you’re not far off. So in both cases, participants were presented with small view screens while in the device itself. The first dataset had thirty-two participants perform an adaptive learning task designed like a video game. Essentially, the participant would try to catch bags of coins in a bucket as they were dropped from the top of the screen by an unseen helicopter. The bags drop fast enough that the user doesn’t have much time to position the bucket, so participants have to use the position of the last bag drop and a general sort of average to predict where the next drop might occur.

The second dataset was from a more naturalistic context: twenty volunteers were asked to simply watch a portion of nine basketball games played on the screen while getting scanned. A Notre Dame vs. Xavier men’s college basketball game, or portions thereof, was played to the captive fMRI audience. Events in the game were coded, including changes in possession, baskets, penalties, three-pointers and more. The fMRI results were then read and matched to the coded play-by-play. “In these basketball games, any violation of expectation could trigger a surprise response in the brain,” said Zhang.

Models Predicting the Unpredictable

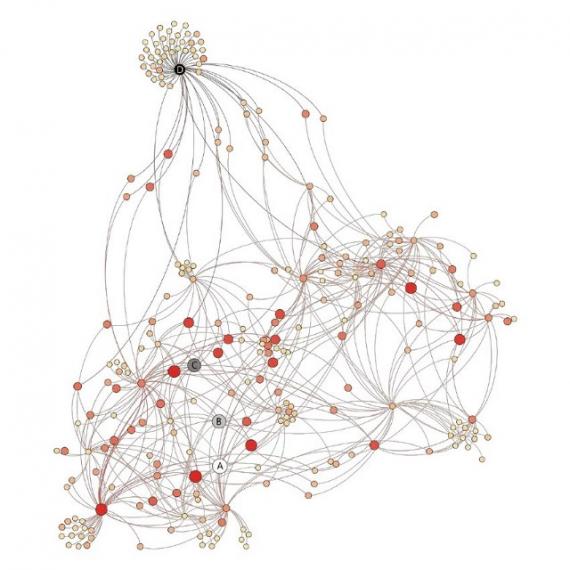

A look at the figure above shows that regardless of how surprised an individual might be, the neurological phenomenon it represents is wide-ranging and complicated. Unlike those black and white horror movies, no simple prod to a discrete fold of the brain that makes a finger move will generate something as complicated as surprise. Surprise involves an expansive network of nodes and edges covering the entire brain. With such complicated data, how can scientists make any definitive claims about the nature of the brain? Says Rosenberg, “The idea of motor tapping is very specific, but attention, encoding to memory, being surprised, or understanding a story involves much more complicated processes. We can’t yet tell you why each of these individual brain connections is related to surprise. But demonstrating that together they robustly predict surprise across different datasets and situations is important, and from that basis we can make educated guesses based on what is happening.”

The EFPM, or Edge-Fluctuation-Based Predictive Model, uses brain edge dynamics, showing that it could be possible to “translate” surprise to other situations and different contexts. The result provides further verification of the complex neural relationship of certain brain networks, demonstrating connections between the posterior cingulate cortex, the frontoparietal network and the limbic system.

Previous work at Rosenberg’s lab had focused on “focus.” In particular, what the brain looks like when people are doing something that requires attention, for example, watching a movie. “My background is in studying how our attention fluctuates from one moment to the next.” In this research, another graduate student from Rosenberg’s group, Hayoung Song, had found a complex weave of connections that predicted how engaged people were in the narratives. Intriguingly, they found that two people watching the same movie became in tune with one another, experiencing similar patterns. Building on this research, Zhang asked what our brains would look like when people were surprised “together.”

How does the fMRI actually work?

Brain scans are collected using an MRI scanner. To measure changes in brain activity, researchers can use a process called BOLD, or blood-oxygenation-level-dependent, imaging. The fMRI works because the brain is one of the most blood-thirsty organs. Picture a network of capillaries shipping blood past a neuron and draining into small veins, called venules, on the downstream side.

Oxygen-rich blood goes in one side, and less oxygenated blood (carrying deoxyhemoglobin) comes out on the downstream side. But when the neuron is activated, it needs more oxygen, triggering an increase in blood flow that delivers more oxyhemoglobin than the neuron uses. That excess oxygen means that even the downstream venules contain oxygenated blood, creating bright spots on the readout. These bright spots are recorded in a time series with roughly 1-2 seconds between images, compiling almost four thousand detailed images.

Learning about Surprise with Surprise Learning

Zhang and Rosenberg found that fMRI activity, or BOLD signal, in individual brain regions alone is not a great predictor of surprise. That is perhaps unsurprising, as these independent data do not show the relationships between brain areas, which is why EFPM is so important. Instead, they found that these relationships, or edges, predicted surprise as people did the learning task and watched the basketball games. Zhang described brain networks by relating them to what we’ve learned about social networks: “If we think of people as nodes in a network, then the interactions between those people are what we call edges. We get a much fuller picture of communities by considering not just individuals, but also their relationships. The same is true of the brain.”

Nodes on a social network visualization, Knutas, Hajikhani and Salminen, 2015. DOI: 10.1145/2812428.2812442

Zhang and Rosenberg used a technique called computational lesioning analysis to understand how important different brain edges were for predicting surprise. Computational lesioning analysis has its roots in the physical phenomenon of brain lesions, in which a patient, through trauma or perhaps infection, develops a brain injury that interferes with signals in that region of the brain. Seeing what is missing often helps narrow our focus on what is actually happening. When an fMRI takes a full brain picture, scientists can use lesioning analysis to remove signals they don’t want to see at that moment. With these comparisons they can make more informed estimates about the relationships between each region.
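The logic of a computational lesion can be shown with a toy example. This is a sketch under stated assumptions, not the team’s procedure: the “model” here simply averages a set of edges at each time point, the score is an ordinary correlation with the surprise time course, and all names and numbers are invented. The question it asks is how much the score drops when a given edge is removed.

```python
from statistics import mean

def pearson(x, y):
    """Ordinary linear correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def predict(edges, selected):
    """Toy stand-in for a fitted model: average the selected edges at each time point."""
    n_time = len(edges[selected[0]])
    return [mean(edges[name][t] for name in selected) for t in range(n_time)]

def lesion_effect(edges, selected, lesioned, target):
    """Drop in prediction score when the lesioned edges are removed."""
    kept = [name for name in selected if name not in lesioned]
    if not kept:
        raise ValueError("cannot lesion every edge in the model")
    full_score = pearson(predict(edges, selected), target)
    lesioned_score = pearson(predict(edges, kept), target)
    return full_score - lesioned_score

# Hypothetical data: edge "A-B" tracks surprise closely, edge "B-C" does not.
surprise = [0.0, 1.0, 0.0, 2.0, 0.0]
edges = {
    "A-B": [0.1, 0.9, 0.0, 2.1, 0.2],
    "B-C": [0.5, 0.4, 0.6, 0.5, 0.5],
}
selected = ["A-B", "B-C"]
effect_ab = lesion_effect(edges, selected, ["A-B"], surprise)
effect_bc = lesion_effect(edges, selected, ["B-C"], surprise)
```

In this toy data, removing "A-B" wrecks the prediction while removing "B-C" barely changes it, which is the kind of contrast that lets researchers say one set of connections matters more than another.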

Rosenberg’s team will continue to use and refine the Edge-Fluctuation-Based Predictive Model. Belief-inconsistent surprise is all about sudden environmental change, and understanding the neuroscience of how people react to such stimuli would be invaluable. Predicting surprise could assist in behavioral learning and more broadly across psychology, but it could also be useful in areas as diverse as artificial intelligence, disaster planning or political polling. Now that they know the model can provide a prediction on that metric, further work can be done to compile data on how surprise works in specific situations, like watching a film or driving a vehicle. The Cognition, Attention, and Brain Lab continues to take advantage of RCC resources and expertise, and further collaborations using advanced computational methods to advance their study of neuroscience are imminent.

For Rosenberg, this work ignites a series of eye-opening follow-ups. Namely, what comes after this first transient surprise signal? “Does it push us into a more engaged brain state, facilitating subsequent learning? I'm also curious to understand how and why people's neural signals of surprise to the same information can differ. What does that tell us about how they understand and learn from the current situation?”

Zhang observed that the Twitter AI, Grok, was unperturbed when describing the Rosenberg lab’s latest paper: “Changes in how your brain parts talk to each other can tell us when you'll be surprised, no matter what you're doing.” Clearly, artificial intelligence could use some lessons on the value of being surprised!